ICANN, which regulates the domain name system, is reviewing the renewal of the .ORG registry contract with Public Interest Registry (PIR). It's also running an identical process for .BIZ, .INFO, and .ASIA, but those are of less concern to most people and lack the long pre-ICANN history that .ORG has. The proposal was already discussed between PIR and ICANN staff before being put out for comment from stakeholders. That the contract was negotiated behind closed doors, without input beforehand, is worrisome in itself.

ICANN's stated goal is to bring the .ORG contract into alignment with the new gTLD base agreement, the contract used for the hundreds of new extensions released over the past few years. The justification is that this is simply easier to manage administratively ("better conform with the base registry agreement") and treats registry operators equitably across new and legacy gTLDs.

For review, ICANN stated there are six material differences from the original contract. The ones that caused the most concern were:

- Removing the price cap provisions

- Adding Uniform Rapid Suspension (URS) system, the Trademark Post-Delegation Dispute Resolution Procedure (PDDRP), and the Registration Restrictions Dispute Resolution Procedure (RRDRP).

- The Registry Operator has adopted the public interest commitments and applicability of the Public Interest Commitment Dispute Resolution Process (PICDRP)

I will focus on the biggest issue, removing price caps. The other two issues are well addressed in a statement from the Electronic Frontier Foundation (EFF) and Domain Name Rights Coalition (DNRC) here. To summarize the EFF/DNRC position: URS was designed for new gTLDs, and there is no conclusive evidence it has even been effective. It shouldn't be applied to legacy TLDs like .org for no apparent reason beyond contract standardization. The public interest commitments are turning registries into censorship organizations, which goes against ICANN's mission.

ICANN's Russ Weinstein released ICANN's own summary report, which highlights comments from some influential, well-known groups: the EFF/DNRC, along with multiple ICANN groups (Business Constituency, At-Large Advisory Committee, Non-Commercial Stakeholders Group, Intellectual Property Constituency, Registrar Stakeholder Group), the National Council of Nonprofits, the Internet Commerce Association, and a few others.

One of the biggest omissions is the joint comment against removing price caps from NPR, YMCA, C-SPAN, National Geographic Society, AARP, The Conservation Fund, Oceana, and the National Trust for Historic Preservation.

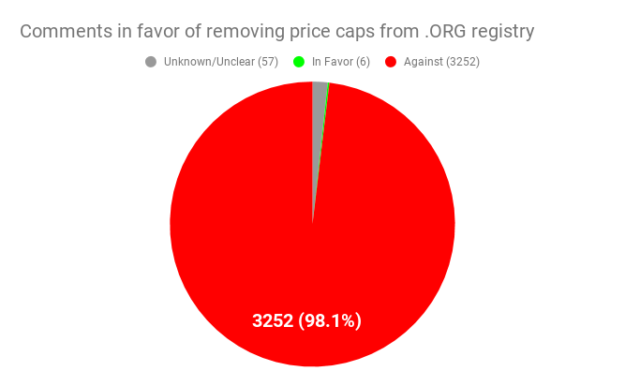

What is also missing is a deeper look at the comments themselves. ICANN says over 3,200 comments were received. The report picked out two comments in favor of removing price caps and mentioned ten that were against it for various reasons. Even from the report alone, it appears clear that more people were against it.

But is the ratio really 2:10 in favor:against?

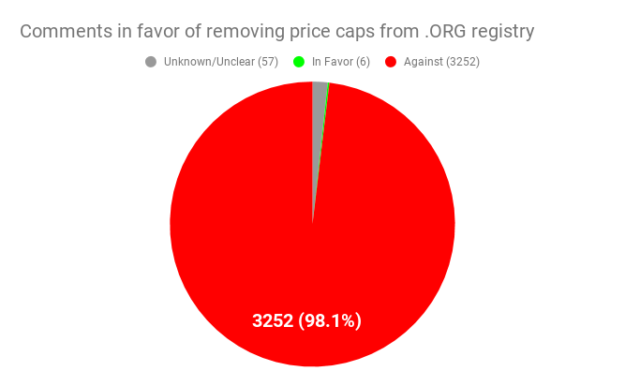

I collected all the comments and analyzed them for discussion of the price caps/price protection. I used Mechanical Turk to categorize the vast majority, investigating myself whenever the readers couldn't make sense of a comment. Full details about how I collected this data will be available in a separate article soon. Long story short, each comment was read by two different workers, each based in the US, UK, Australia, or Canada, with over 100 completed jobs and 95% accuracy on the Mechanical Turk platform, to make sure they were experienced and had a native understanding of English.
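The two-reader setup amounts to a simple agreement rule. Below is a minimal sketch of that rule, assuming each comment gets one label per reader and that any disagreement (or an "unclear" label from both readers) is set aside for manual review. The label names, function names, and comment IDs are hypothetical and are not the actual categories or tooling used for this analysis:

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple

# Hypothetical label for "no clear position"; the real category scheme is not published here.
UNCLEAR = "unclear"

def reconcile(label_a: str, label_b: str) -> Optional[str]:
    """Accept a label only when both readers agree on a clear position."""
    if label_a == label_b and label_a != UNCLEAR:
        return label_a
    return None  # disagreement or no clear position: route to manual review

def tally(labels: Dict[str, Tuple[str, str]]) -> Tuple[Counter, List[str]]:
    """Count agreed labels and collect comment IDs that need a human look."""
    counts: Counter = Counter()
    needs_review: List[str] = []
    for comment_id, (a, b) in labels.items():
        agreed = reconcile(a, b)
        if agreed is None:
            needs_review.append(comment_id)
        else:
            counts[agreed] += 1
    return counts, needs_review

# Toy input with made-up comment IDs, not real data.
example = {
    "c1": ("against", "against"),
    "c2": ("favor", "against"),
    "c3": ("unclear", "unclear"),
}
counts, needs_review = tally(example)
print(counts, needs_review)  # Counter({'against': 1}) ['c2', 'c3']
```

Requiring agreement from both readers trades some manual work for confidence: only comments where two independent readers saw the same clear position are counted automatically.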

The outcome: 3,252 comments (98.1%) were against removing price caps or giving .ORG the ability to increase pricing. 57 comments (1.72%) weren't processed for one reason or another (empty emails, foreign languages, unclear position, no position on price, comments sent as attached PDFs, etc.). I could find only six comments (0.18%) in favor of the proposed changes out of thousands.
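Each share is that category's count divided by the total. A quick check, assuming the three categories together cover every comment analyzed (3,252 + 57 + 6 = 3,315), which is the denominator that reproduces the 98.1% and 1.72% figures:

```python
# Counts as reported above; "unprocessed" covers empty emails, foreign-language
# comments, PDF attachments, and comments with no clear position on price.
counts = {"against": 3252, "unprocessed": 57, "in favor": 6}
total = sum(counts.values())  # 3315 comments analyzed in total

for label, n in counts.items():
    # Share of all analyzed comments, as a percentage
    print(f"{label:>11}: {n:5d} ({100 * n / total:.2f}%)")
```

With that denominator, the six comments in favor come to roughly 0.18% of the total.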

The result is overwhelming: nearly everyone is against these changes.

Who is in favor of removing price caps?

Since there are so few comments in favor, I can look at each of them.

"Increased competition and choice has been a major benefit for consumers in the TLD market. Moving the .org legacy TLD to a new TLD contractual agreement is also an opportunity to move to more market-based pricing in the domain name space and away from arbitrary price caps. As Makan Delrahim, head of the Department of Justice’s Antitrust Division, recently stated, antitrust is there to “support reducing regulation, by encouraging competitive markets that, as a result, require less government intervention.” While ICANN is not a regulator, it has had its contracts reviewed by the DOJ’s antitrust division, which concluded that only .com had market power in the domain space.

Allowing .org and future domain names to move to market-based pricing makes sense with today’s healthy TLD market, which is populated with many choices for consumers to choose from. The .org domain name is well known as one of the first TLDs in the market available for public registration, but it still only holds 5.5 percent market share, with just over 10 million names in the .org space compared to almost 140 million domain names and 75 percent market share for .com."

- Shane Tews / Logan Circle Strategies / American Enterprise Institute

Who is Shane Tews? According to her LinkedIn profile, Shane was formerly Vice President of Global Public Policy and Government Relations for Verisign, Inc. Her job was to: "Represent Verisign’s interest before United States and International government officials in the Information Communications and Technology Sector. Responsible for the strategic planning and daily management of the Policy and Government Relations efforts for VeriSign globally. Participate in the development of e-commerce policies with International governing bodies, National and State Legislators, International, National and Regional trade associations and Information Technology coalitions. Manage Internet infrastructure, e-commerce, tax, telecommunications, privacy, content, and domain name, and Information Technology regulatory issues. Coordinate Relationship building efforts using third-party coalitions to target audiences to inform and direct interests in Verisign’s issue areas. Disseminate legislative and regulatory and international directive information to Corporate Executives and business unit managers. Direct all political activity. Oversee the daily operation of the VeriSign Political Action Committee and all political fundraising." So she used to represent VeriSign's interests and direct its political and lobbying activities. She favors removing a registry's price caps and allowing free-market pricing, a policy that VeriSign, with its .com monopoly, has a stronger financial incentive to push for than any other registry in existence. Shane also worked at Vrge (just a note for later).

"Given the BC’s established position that ICANN should not be a price regulator, and considering that .ORG and .INFO are adopting RPMs and other registrant provisions we favor, the BC supports broader implementation of the Base Registry Agreement, including removal of price controls.

...

We recommend that whenever price caps are removed, it is important for contracted parties to responsibly keep prices at reasonable levels, to maintain consumer trust and to ensure price predictability for their existing and prospective registrants. It would negatively affect business registrants if contracted parties were to take undue advantage of this greater flexibility by substantially increasing renewal prices for an existing registrant who has significantly committed to its domain name. We therefore urge ICANN and contract parties to ensure domain prices are predictable and within the parameters of the renewal agreement, in order to demonstrate that the removal of price caps was a prudent policy approach."

- Steve DelBianco / ICANN Business Constituency (drafted with Mark Datysgeld and Andrew Mack)

"The Business Constituency (BC) is the voice of commercial Internet users within ICANN," according to its website. So who is Steve DelBianco? Steve represents NetChoice, a lobbying organization that counts VeriSign as a member. NetChoice's Form 990 filings show it works with Vrge (a strategy/lobbying group), where our friend Shane worked, spending $255,000 in 2015 (overlapping Shane's tenure) and $150,000 in 2017. The organization lists Jonathan Zuck as a board member (remember his name). Steve DelBianco has been lobbying for VeriSign through NetChoice since at least 2007, as far back as I could find, and NetChoice pays him $400,000/year according to its 2017 filings.

I didn't find much about Mark Datysgeld.

Andrew Mack runs a company called AM Global which lists Public Interest Registry (PIR) as a client.

So representing all business interests at ICANN on this issue are two people who lobby for and represent the registry operators VeriSign and PIR. If Business Constituency means Registry Businesses, they are doing a good job. It appears that constituency has been captured by registry interests.

"I think this is a good idea. Something needs to be done to stop Domain name squatters siting on good names for years and demanding outrageous sums for their release and sale. vastly increasing the prices of domains would go a long way to stopping this practice."

- Martin Houlden

Martin appears to be a web developer in England with a gripe about speculation who thinks raising prices may solve the issue.

"Proposed price hike for .org

Stop trying to regulate everything under the sun. Leave the free market of product and services alone."

- Rac Man Radio

This reads as a deregulation argument, but the subject line, 'Proposed price hike for .org', hints that the commenter doesn't welcome increasing prices. It could very well be against removing price caps.

"I wholeheartedly agree with you, this should be taken in place."

- Timotej Leginus

It isn't clear what exactly he is agreeing with, but it was an agreement, so it was categorized as in favor.

"Raise prices on .ORG!!!

Based on my research, you should raise prices on dotORG domains to $1000/yr and only allow emoji."

- Jon Roig

I think it's pretty apparent this is sarcasm.

Who is for removing price caps?

VeriSign. PIR. And one guy who thinks removing price caps will reduce speculation.

Not only is there virtually no support for this policy, the only people making any argument in favor of removing price caps have captured an ICANN constituency to do it, one that is supposed to represent business interests broadly (not registry interests).

Who Represents Us?

"The At-Large Advisory Committee (ALAC) is the primary organizational home for the voice and concerns of the individual Internet user."

So the average internet user is represented at ICANN, and the ALAC issued a statement.

At best, it's a wishy-washy, here-are-the-two-sides argument. This is the argument they present in favor of price caps:

"Price caps were deliberately instituted in recognition of such first-to-market advantage as the means to prevent foreseeable abuse in pricing of domain name registration renewals on existing registrants. It could be said that once a registrant has registered a domain name and invested resources to build a web presence around the same, the cost of switching that presence onto another domain name could well be significant. Further, in the case of the .org TLD, many registrants rely on their example.org domain names to signify their not-for-profit status, in very much the same way entities in many jurisdictions are obliged to carry suffixes in their names as the means of whether they are publicly-traded companies or privately-held ones. (It is also not inconceivable that newly-established not-for-profit organizations would want a .ORG domain name upon which to build their web presence, so the proposition of availability of choice to switch falters with these entities.)

Thus without price caps in place, certain registrants of domain names under these 3 TLDs may foreseeably have no reasonable recourse against their respective registrars/Registry Operators’ action in instituting immediate unrestrained price increases in domain name registrations and/or renewals (as the case may be). "

The argument against price caps:

"On the other hand, while seemly counterintuitive, price increases could be a positive development in the DNS space from the broader end-user perspective. It has been suggested that price caps suppress prices to a point that makes it difficult for new entrants to compete in the TLD space and thus removal of price caps is likely to be good for competition. Price caps also obscure the true value of a domain name and allows the perpetuation of their treatment as commodities, where artificially low prices of domain names keeps the door open to “abuse” – such as confusingly similar strings, typo squatting, phishing and fraud – in detriment to Internet end-users. Hence the removal of price caps is seen by some as a strategic move to boost prices as one way to deter bad actors, even portfolio domain investors, which in turn would increase choices in the primary market for potential registrants. So, while complex economic analysis is well beyond the scope of this comment, an increase in the median price of gTLDs could be good for competition, security and trust in the domain name space.

Also, uncapped pricing may or may not automatically translate to significant price increases, unreasonable increases, pricing beyond the current cap or for that matter any price increases across the board or in any particular TLD.

The ALAC and At-Large have a particular interest in .ORG, due to its connection to the Internet Society. As noted in ALAC’s .NET comment, a significant portion of .ORG registration fees “are returned to serve the Internet community [through] redistribution of .org funds into the community by the Internet Society, to support Internet development.” Notably, this includes support for the IETF, an “organized activity” of the Internet Society (ISOC). The IETF is a critical organization in the development, safety, security, and resiliency of the Internet and the DNS. Furthermore, ISOC’s goals and priorities, while far broader than At-Large (and even ICANN), parallel those of At-Large and the interests of end-users. Many At-Large Structures are also ISOC Chapters, further demonstrating the commonality of interests.

When considering this issue in the context of the .ORG renewal, it is important to note that Public Interest Registry (PIR) has not increased rates at all over the last three years, even though it had the right to increase prices cumulatively by more than 30% during that time period. It is our understanding that when PIR has contemplated an increase in .ORG pricing, the matter has been discussed thoroughly by the Board, which analyzed the pros and cons, taking into account the potential benefits, the impact on the market, and the impact on the image of PIR. "
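The "more than 30%" figure is what compounding gives when a percentage cap is taken in full every year. A minimal sketch, assuming a 10% annual cap on .ORG (consistent with the 10% increases PIR took in 2015 and 2016 in the price table below); the function name is illustrative:

```python
def max_cumulative_increase(annual_cap: float, years: int) -> float:
    """Largest total increase allowed if the cap is taken in full every year."""
    return (1 + annual_cap) ** years - 1

# Assumption: a 10% annual cap applied in each of three consecutive years.
print(f"{max_cumulative_increase(0.10, 3):.1%}")  # 33.1%
```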

The argument for getting rid of price caps opens by admitting that the logic seems counterintuitive, and that is because it doesn't make sense or hold up to any real scrutiny. .ORG operates as a monopoly for nonprofit organizations; there are no new entrants. The 'competition' from new gTLDs isn't real, because millions of nonprofits are already locked into their brands and websites. .ORG is a utility, price capped and running as a monopoly, with no reason to uncap it beyond greed. There is no evidence that legacy TLD prices are in any way related to abuse. In fact, there is evidence to the contrary: the new gTLDs are causing the most abuse (source). This is scaremongering and a meritless argument. Arguing that higher prices deter bad actors and then arguing that PIR isn't interested in raising prices is nonsensical and self-contradictory. Furthermore, instead of acknowledging the blatant conflict of interest, that many Internet Society chapters are involved with ALAC and that PIR's profits go to the Internet Society, they argue that this is in the best interest of everyone. This is the fox guarding the henhouse saying foxes guarding henhouses is a good idea. Finally, they argue that PIR is a good-faith actor because it hasn't raised prices at every opportunity in the past three years. What this ignores is that PIR has already raised .ORG prices above .COM, for which VeriSign charges $7.85.

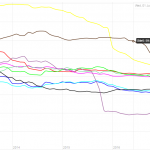

Here is a table of registry prices since 2003:

| Date | .ORG Price (% Increase) | .COM Price (% Increase) |
|---|---|---|
| 2003 | $6.00 | $6.00 |
| 10/15/07 | $6.15 (2.5%) | $6.42 (7%) |
| 03/04/08 | | $6.86 (7%) |
| 11/09/08 | $6.75 (~10%) | |
| 12/17/09 | | $7.34 (7%) |
| 04/01/11 | $7.21 (~7%) | |
| 01/15/12 | | $7.85 (7%) |
| 07/01/12 | $7.45 (~3.5%) | |
| 07/01/13 | $8.00 (~7%) | |
| 07/31/15 | $8.80 (10%) | |
| 08/01/16 | $9.68 (10%) | |
| Current | $9.68 | $7.85 |
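The table's step percentages and the overall gap between the two TLDs can be recomputed directly from the listed prices. A short sketch using only the prices in the table (blank cells skipped); the function names are illustrative:

```python
# Registry prices from the table above (dates as listed; blank cells omitted).
org = [("2003", 6.00), ("10/15/07", 6.15), ("11/09/08", 6.75), ("04/01/11", 7.21),
       ("07/01/12", 7.45), ("07/01/13", 8.00), ("07/31/15", 8.80), ("08/01/16", 9.68)]
com = [("2003", 6.00), ("10/15/07", 6.42), ("03/04/08", 6.86),
       ("12/17/09", 7.34), ("01/15/12", 7.85)]

def step_increases(prices):
    """Percent change between each price point and the one before it."""
    return [(date, 100 * (p / prev - 1))
            for (_, prev), (date, p) in zip(prices, prices[1:])]

def cumulative_increase(prices):
    """Percent change from the first listed price to the last."""
    return 100 * (prices[-1][1] / prices[0][1] - 1)

for name, prices in ((".ORG", org), (".COM", com)):
    steps = ", ".join(f"{d}: {pct:.1f}%" for d, pct in step_increases(prices))
    print(f"{name}: {steps}")
    print(f"{name} cumulative since 2003: {cumulative_increase(prices):.1f}%")
```

On these numbers, the .ORG registry price rose about 61% from 2003 to its current level, versus about 31% for .COM. Most computed steps match the table's rounded figures; the 07/01/12 .ORG step works out to roughly 3.3%.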

"There is no particular reason to believe that PIR will engage in excessive price increases; rather, there are substantial reasons to believe that PIR will consider the public interest and act in a measured and prudent fashion when it considers possible price increases."

PIR has raised prices on nonprofits above what VeriSign charges for .COM because its contract allowed it to. VeriSign's .COM contract has price caps built in, and VeriSign raised prices the maximum 7% at every opportunity; PIR's looser price cap let it raise prices further. The fact that PIR hasn't raised prices in the past couple of years doesn't change history and facts. Pricing .ORG above .COM shows that PIR sees an opportunity to make more profit by raising prices on nonprofits, who are locked in and won't switch to .COM or other gTLDs. PIR did this before the rush of new gTLDs, which means it understands its monopoly power over nonprofits, and .ORG has continued to grow despite .COM being cheaper.

If we read further down the statement we reach this:

"Contrastingly, the .BIZ and .INFO TLDs are operated by for-profit registries (i.e. Registry Services, LLC and Afilias Limited, respectively). It is not entirely clear how often and how much the Registry Operators for the .BIZ and .INFO TLDs have raised their prices in the past, and it is unknown how often or how much they will do so in the future. (If the price caps were to be removed for .ORG, .BIZ and .INFO under the call for “standardization”, then it is foreseeable that the .com and .net TLDs (both run by Verisign as registry operator) would also lose their price caps at some point, and there is no way to tell whether Verisign would then increase prices significantly or how often it would do so, even with the knowledge of them having instituted the full 10% annual price increase each year for .NET since at least 2005)."

First off, the people representing ALAC cannot figure out how often prices have been increased before making a statement on price caps? That alone is an embarrassing lack of knowledge when writing about the impact of price caps and talking about actions of registry operators.

The end game is spelled out here, though: price cap removal on .COM/.NET for VeriSign. Let's be clear: this is the ultimate goal.

The ALAC statement concludes,

"So, we are essentially grappling with competing considerations and uncertainties. After balancing the same, we do not find support for a particular position regarding the removal of price caps."

Given that the statement was written primarily by Internet Society members whose organization would stand to benefit most from removed price caps, it's suspect at best that they cannot pick a side.

Who is behind the statement?

The statement was originally written by Greg Shatan, later with help from Justine Chew and Judith Hellerstein, and with some sort of involvement from the ALAC Chair, Maureen Hilyard.

Greg Shatan is the President of the Internet Society NY Chapter. Judith Hellerstein is the Director of the Washington DC chapter of the Internet Society. Maureen Hilyard is a board member and used to Chair the board of the Pacific Islands Chapter of the Internet Society.

Here is what Greg Shatan wrote about the thousands of emailed comments submitted:

"I would not place much weight on the slew of comments sent in on .ORG (and others). Many of these are “cut-and-paste” comments with identical text. Others are one-liners. Some are quite ill-informed (one commenter thought they would have to pay the RO quarterly fee, others have thought that PIR is a for-profit organization, etc., etc.).

I assume that some commenters sincerely felt threatened. But was this a credible, well-founded or well-informed fear, or just a trip through a fun-house designed to get a rise out of the commenters? A great number of these comments are the direct result of a well-orchestrated campaign, rife with overblown statements and catastrophic worst-case scenarios. In the absence of other information, such campaigns can be quite effective in fomenting fear and then harnessing that fear in order to flood a comments period. We’ve seen this before. If you wind people up, you can get a lot of them to go in the direction you want.

It might be too generous to say that “some lobby groups” are behind this. It appears to be one. A Google search revealed the “engine” used to generate all of those identical comments, complete with four pre-loaded variations, and cleverly engineered so that the comment will come from the sender’s own email account and not from the “engine.” The page is here: https://www.internetcommerce.org/comment-org/. There are multiple links from other pages, blogs, social media accounts, etc., to this resource.

These campaigns can reach out in many directions, in different places and in different guises. It can take a great deal of discernment to recognize them for what they are and to resist them. I hope that the CPWG collectively can be discerning." - Greg Shatan

Greg Shatan disregards at least 98% of the opinions expressed and apparently thinks it's best to represent the lobbyists and organizations connected to PIR and VeriSign. Here, "discerning" means ignoring the internet-using public and serving the interests of the registries and those who profit from them (the Internet Society, of which he is a member).

He represented ALAC on the matter as pen holder, and ALAC is supposed to represent the concerns and voices of the individual internet user. He has openly said he doesn't believe what internet users are saying and wants to resist them.

What happens when you claim an organization is captured?

Apparently it's insulting and a violation of the ICANN code of conduct. The person who found this most offensive was Jonathan Zuck, a (former?) board member at NetChoice, the same VeriSign lobby group Steve DelBianco operates. Jonathan was heavily involved in the discussion about the ALAC statement. He has a long history of lobbying and was described in a WikiLeaks document as follows:

"This file is an edited version of the EU OSS Strategy draft with the input of Jonathan Zuck, President of the Association for Competitive Technology, an organisation that has strong ties with Microsoft.

The file is a draft for an expert panel formed by the European Commission. This panel is divided into workgroup (IPR, Open Source, digital life, etc.) ACT and Comptia have been infiltrating every workgroup, even the one on Open Source (WG 7). They are doing the best they can to drown any initiative that would not only promote OSS in Europe but also that could help Europe create a sucessful European software sector." (source)

Does this sound familiar? Infiltrating every working group to push an organization's interests. Jonathan is Co-Chair of the At-Large Consolidated Policy Working Group, so he worked closely on writing the ALAC statement. He has disclosed strong ties to VeriSign.

Conclusion

At best, the public interest is being represented mostly by people with potential conflicts of interest in this matter. At worst, it looks like special interest groups for VeriSign and the Internet Society (ISOC)/Public Interest Registry have captured multiple groups at ICANN and are trying to use them to line their organizations' pockets.

This appears to be a case study in regulatory capture. Beyond public outcry, there appears to be very little stopping ICANN from simply pushing through these contracts despite overwhelming evidence that the average internet user isn't in favor of these changes. There is virtually no meaningful argument in favor of removing price caps, unless you accept that it would be administratively easier for an organization with hundreds of millions of dollars in the bank. There is a bizarre free-market argument that falls apart once you consider the lock-in cost of building a web presence on any domain. .ORG is a thirty-million-dollar subsidy to the Internet Society via PIR, which outsources the actual registry services through a competitive bid. And they want the ability to charge more, at the expense of every other nonprofit. Their interests strongly align with VeriSign's, which most likely sees .ORG as the last hurdle before getting price caps removed on .COM and .NET, increasing its bottom line even more under its noncompetitive monopoly contract.

As far as I am aware, the only step left before these changes go into effect is board approval. I think it will probably go through unless a tremendous amount of attention is brought to the issue. I'm not only concerned about price caps on .ORG; structurally, the only people who can invest so much time and money participating in ICANN are the organizations that stand to gain the most financially. Who is representing the public interest? Registry groups appear to have a strong voice within the ICANN community. Finally, I also worry about potential conflicts of interest at the board level, which has twenty members. Five ICANN board members were or are connected to ISOC (1,2,3,4,5). One is also a former board member of PIR. I hope they recognize their responsibility to do what is right for everyone and not just for a select few registry operators in this instance.

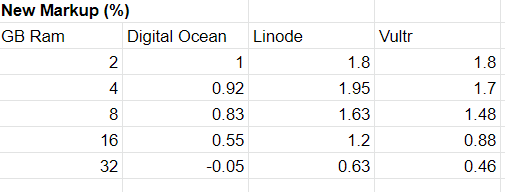

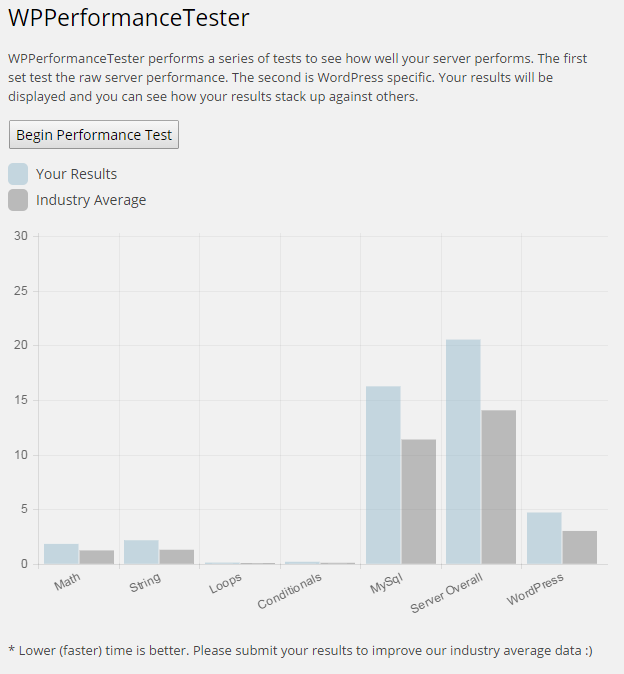

WordPress & WooCommerce Hosting Performance Benchmarks 2021

WordPress & WooCommerce Hosting Performance Benchmarks 2021 WooCommerce Hosting Performance Benchmarks 2020

WooCommerce Hosting Performance Benchmarks 2020 WordPress Hosting Performance Benchmarks (2020)

WordPress Hosting Performance Benchmarks (2020) The Case for Regulatory Capture at ICANN

The Case for Regulatory Capture at ICANN WordPress Hosting – Does Price Give Better Performance?

WordPress Hosting – Does Price Give Better Performance? Hostinger Review – 0 Stars for Lack of Ethics

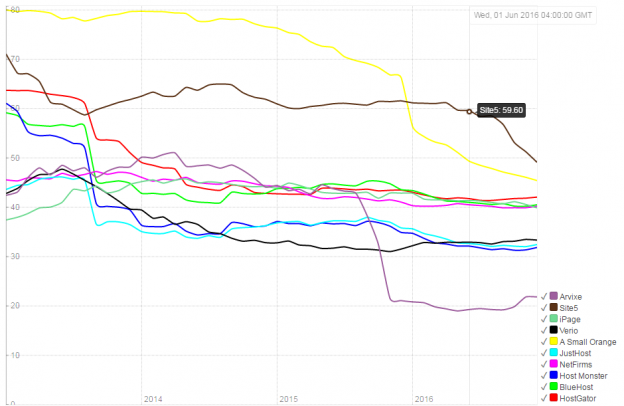

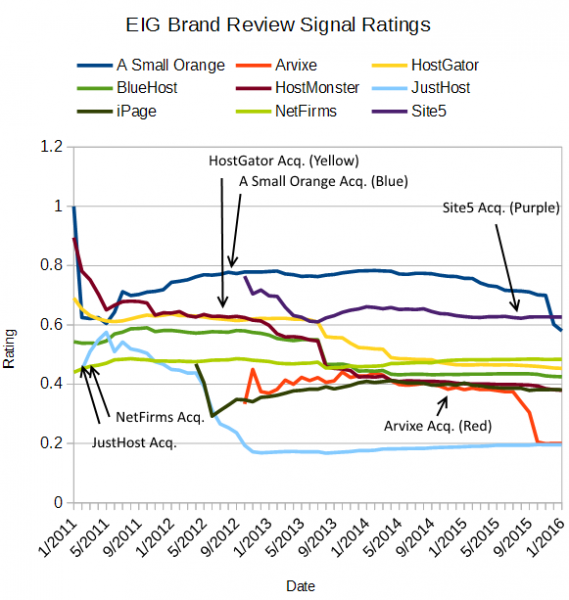

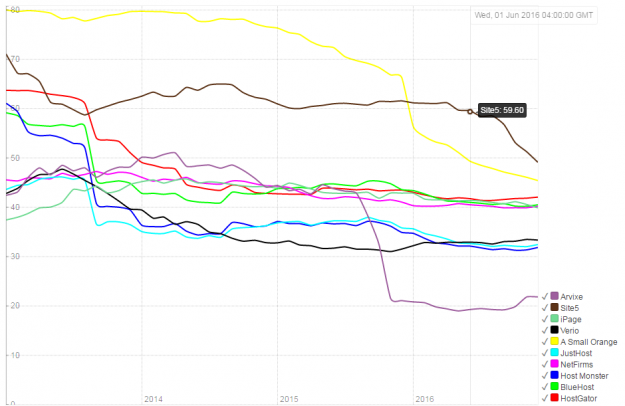

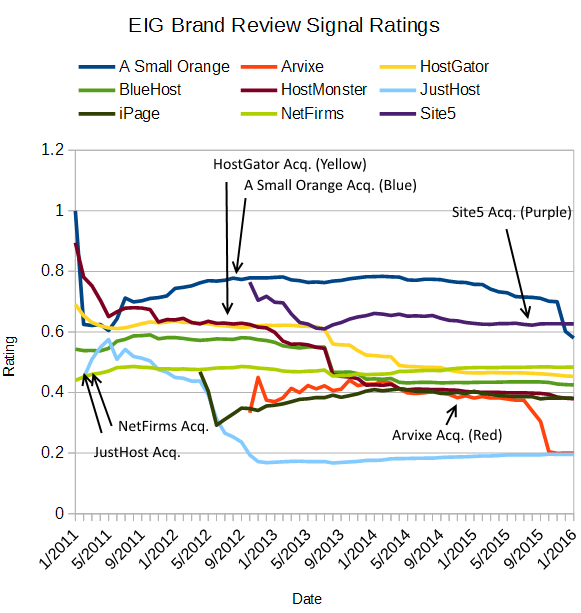

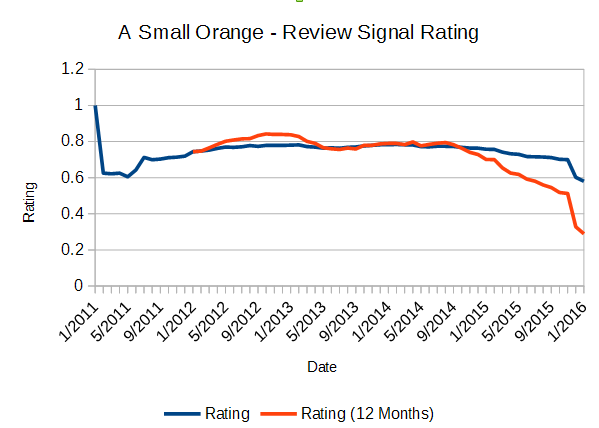

Hostinger Review – 0 Stars for Lack of Ethics The Sinking of Site5 – Tracking EIG Brands Post Acquisition

The Sinking of Site5 – Tracking EIG Brands Post Acquisition Dirty, Slimy, Shady Secrets of the Web Hosting Review (Under)World – Episode 1

Dirty, Slimy, Shady Secrets of the Web Hosting Review (Under)World – Episode 1 Free Web Hosting Offers for Startups

Free Web Hosting Offers for Startups