I recently tested many of the biggest names in managed WordPress hosting in my article Managed WordPress Hosting Performance Benchmarks. (Update: 2016 WordPress Hosting Performance Benchmarks) I am preparing to do a second round of testing with double the number of companies on board. But some of us like to set up servers ourselves (or are cheap).

Given a reasonable VPS, what sort of performance can we get out of it?

The benchmark to beat, based on a previous iteration of this question, was 10 million hits a day as measured by Blitz.io.

I decided to test this from the ground up: start with the most basic configuration and gradually try to improve it.

All tests were performed on a $10/month 1GB RAM Digital Ocean VPS running Ubuntu 14.04 x64. All code and documentation are also available on GitHub.

LAMP Stack

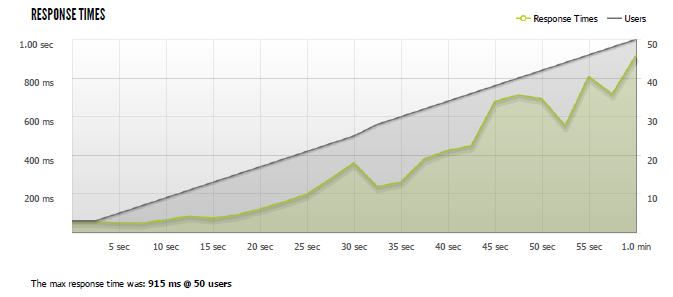

Based on my previous experience benchmarking WordPress, I didn't have high hopes for this test. Last time I crashed MySQL almost instantly. This time I ran Blitz a lot slower, from 1-50 users. The performance wasn't impressive: it started slowing down almost immediately and continued to get worse. No surprises.

The LAMP stack setup script is available on GitHub. Download full Blitz results from LAMP Stack (PDF).

LAMP + PHP5-FPM

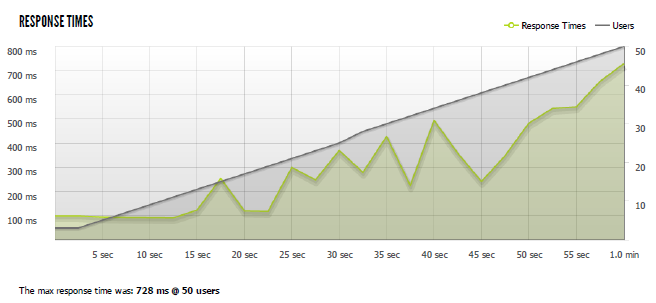

The next thing I tried was PHP-FPM (FastCGI Process Manager). It got slightly better performance, with response times just under 200ms faster at 50 users. But the graph looks pretty similar: we're seeing quickly increasing response times as the number of users goes up. Not a great improvement.

The LAMP + PHP5-FPM setup script is available on GitHub. Download full Blitz results from LAMP + PHP5-FPM (PDF).

Nginx + PHP-FPM (aka LEMP Stack)

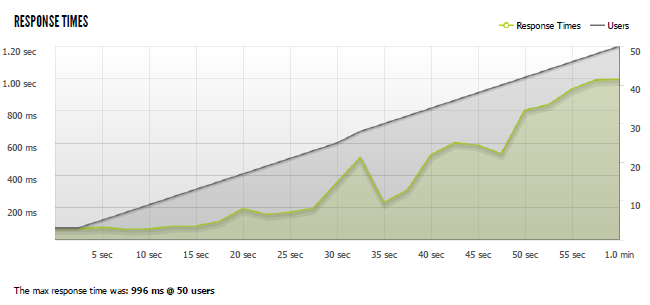

Maybe the problem is Apache? I tried Nginx next. What happened? I got worse performance than the default LAMP stack (wtf?). Everyone said Nginx was faster. Turns out, it's not magically faster than Apache (and appears worse out of the box).

The LEMP + PHP-FPM setup script is available on GitHub. Download full Blitz results from LEMP+PHP-FPM (PDF).

Microcaching

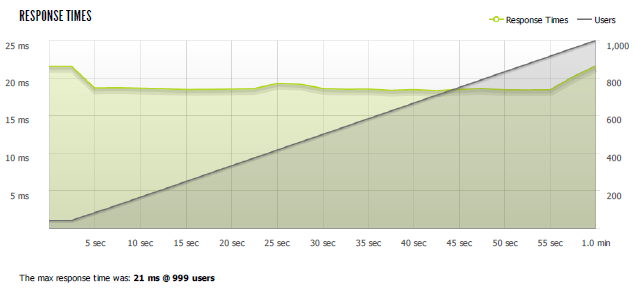

I've written about creating a reverse proxy and cache in Nginx before. But I've already set up Nginx as my web server, so I don't need a reverse proxy this time. Nginx has fastcgi_cache, which allows us to cache results from FastCGI processes (PHP). So I applied the same technique here and the results were staggering. The response time dropped to 20ms (+/- 2ms) and it scaled from 1 to 1000 concurrent users.

"This rush generated 28,924 successful hits in 60 seconds and we transferred 218.86 MB of data in and out of your app. The average hit rate of 482/second translates to about 41,650,560 hits/day."

All that with only 2 errors (connection timeouts).
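The hits/day figure in the Blitz summary is a straight extrapolation of the 60-second rush to a full day. A minimal shell sketch of that arithmetic follows; the function name `hits_per_day` is made up for illustration and is not part of Blitz.

```shell
#!/usr/bin/env bash
# Extrapolate a load-test hit count to a full day:
#   hits / test_seconds * 86400 seconds per day
# hits_per_day is an illustrative helper, not a Blitz command.
hits_per_day() {
    local hits="$1" seconds="$2"
    echo $(( hits * 86400 / seconds ))
}

hits_per_day 28924 60   # prints 41650560
```

Note that the daily number comes from the raw hit count, not from the rounded 482/second rate (482 x 86,400 would give 41,644,800).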

The LEMP + PHP-FPM + microcaching setup script is available on GitHub. Download full Blitz results from LEMP+PHP-FPM + microcaching (PDF).

Microcaching Config Walkthrough

We start with the standard package installs:

    apt-get update
    apt-get -y install nginx
    sudo apt-get -y install mysql-server mysql-client
    apt-get install -y php5-mysql php5-fpm php5-gd php5-cli

This gets us Nginx, MySQL and PHP-FPM.

Next we need to tweak some PHP-FPM settings. I am using some one-liners to edit /etc/php5/fpm/php.ini and /etc/php5/fpm/pool.d/www.conf to uncomment and change some settings (setting cgi.fix_pathinfo=0 and uncommenting the listen.(owner|group|mode) settings).

    sed -i "s/^;cgi.fix_pathinfo=1/cgi.fix_pathinfo=0/" /etc/php5/fpm/php.ini
    sed -i "s/^;listen.owner = www-data/listen.owner = www-data/" /etc/php5/fpm/pool.d/www.conf
    sed -i "s/^;listen.group = www-data/listen.group = www-data/" /etc/php5/fpm/pool.d/www.conf
    sed -i "s/^;listen.mode = 0660/listen.mode = 0660/" /etc/php5/fpm/pool.d/www.conf
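The three listen.* edits all follow the same pattern: strip the leading `;` from one setting. One possible way to tidy that up is a small helper, sketched below. `uncomment_setting` is a hypothetical name and is not taken from the GitHub script; it assumes the settings are written as `;key = value`, as in the stock www.conf.

```shell
#!/usr/bin/env bash
# uncomment_setting FILE KEY
# Remove the leading ';' from a "KEY = value" line in an ini-style file.
# Illustrative helper, not from the original setup script. Running it twice
# is harmless: once the ';' is gone the pattern no longer matches.
uncomment_setting() {
    local file="$1" key="$2" pattern tmp
    pattern="${key//./\\.}"          # escape dots so they match literally
    tmp="$(mktemp)"
    sed "s/^;\(${pattern}[[:space:]]*=\)/\1/" "$file" > "$tmp" \
        && cat "$tmp" > "$file"      # overwrite contents, keep file permissions
    rm -f "$tmp"
}

# Usage (path as in the walkthrough; needs root on a real server):
#   for key in listen.owner listen.group listen.mode; do
#       uncomment_setting /etc/php5/fpm/pool.d/www.conf "$key"
#   done
```

Because the helper only matches lines that still start with `;`, re-running the setup script does not change an already-edited file.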

Now we create a folder for our cache:

    mkdir /usr/share/nginx/cache

We will need this folder in our Nginx configs. In our /etc/nginx/sites-available/default config we add this into our server {} settings. We also make sure to add index.php to our index directive and set our server_name to a domain or IP.

    location ~ \.php$ {
        try_files $uri =404;
        fastcgi_split_path_info ^(.+\.php)(/.+)$;
        fastcgi_cache microcache;
        fastcgi_cache_key $scheme$host$request_uri$request_method;
        fastcgi_cache_valid 200 301 302 30s;
        fastcgi_cache_use_stale updating error timeout invalid_header http_500;
        fastcgi_pass_header Set-Cookie;
        fastcgi_pass_header Cookie;
        fastcgi_ignore_headers Cache-Control Expires Set-Cookie;
        fastcgi_pass unix:/var/run/php5-fpm.sock;
        fastcgi_index index.php;
        include fastcgi_params;
    }

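Then we move on to our /etc/nginx/nginx.conf and make a few changes, like increasing our worker_connections. We also add this line in our http {} block before including our other configs:

    fastcgi_cache_path /usr/share/nginx/cache/fcgi levels=1:2 keys_zone=microcache:10m max_size=1024m inactive=1h;

This creates our fastcgi_cache: a zone named microcache with 10 MB of shared memory for cache keys, up to 1024 MB of cached responses on disk, and entries dropped after an hour without being requested.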

All of these are done in somewhat ugly one-liners in the script (if someone has a cleaner way of doing this, please share!). I've cleaned them up and provided the full files for comparison.

Go Big or Go Home

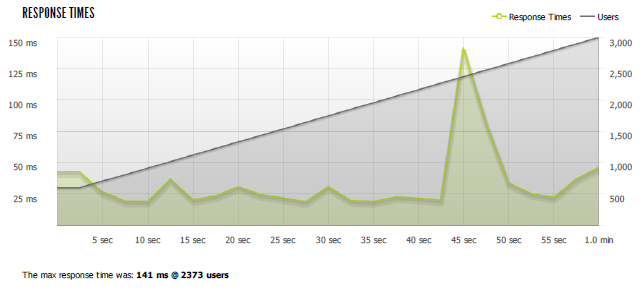

Since Nginx didn't seem to blink when I hit it with 1000 users, I wondered how high it would really go. So I tried from 1-3000 users and guess what?

"This rush generated 95,116 successful hits in 60 seconds and we transferred 808.68 MB of data in and out of your app. The average hit rate of 1,585/second translates to about 136,967,040 hits/day."

The problem was I started getting errors: "4.74% of the users during this rush experienced timeouts or errors!" But it peaked at an astonishing 2,642 users per second. I watched my processes while the test was running and saw all 4 nginx workers maxing out the CPU (25% each). I think I hit the limit of what a 1GB, 1 core VPS can handle. This setup was a champ, though. I'm not sure what caused the big spike (perhaps a cache refresh), but if you wanted to roll your own WordPress VPS and serve a lot of static content, this template should be a pretty good starting point.

Download full results of 3000 users blitz test (PDF)

Conclusion

There are definitely a lot of improvements that can be made on this config. It doesn't optimize anything that doesn't hit the cache (which will be any dynamic content, most often logged-in users). It doesn't talk about security at all. It doesn't do a lot of things. If you aren't comfortable editing PHP, Nginx and other Linux configs/settings and are running an important website, you probably should go with a managed WordPress company. If you really need performance and can't manage it yourself, you need to look at our Managed WordPress Hosting Performance Benchmarks. If you just want a good web hosting company, take a look at our web hosting reviews and comparison table.

All code and documentation is available on GitHub

Thanks and Credits:

The title was inspired by Ewan Leith's post 10 Million hits a day on WordPress using a $15 server. Ewan built a server that handled 250 users/second without issue using Varnish, Nginx, PHP-APC, and W3 Total Cache.

A special thanks goes to A Small Orange, who have let me test multiple iterations of their LEMP stack, and especially Ryan MacDonald at ASO, who spent a lot of time talking WordPress performance with me.

Kevin Ohashi

Nginx is freakin ridiculous when properly configured. This is a must-have part of *any* static website stack. I’ve seen a lot of home-rolled caching solutions that don’t work well (I’m looking at you, WPEngine). Thanks for the easy tips on configuring it.

Is it possible to set up multiple wordpress sites on this set up?

Honestly, I am not sure if it would work out of the box. If you wanted to try and share the results, I would be more than happy to share them as an update or separate post. I’ve been focused on single sites (not multi-sites). I assume you’re going to minimally change the nginx configs to respond to multiple domains. Beyond that, I’m really not sure.

Wow.. Can’t wait to try it out.

This is absolutely great for static websites, we (at Kinsta) use a very similar setup! Nginx is awesome. 🙂

The tricky part is getting dynamic websites to perform the same, where static html caching is not an option. And after that, load balancing so your site is truly running 24/7!

Thanks for sharing these great articles Kevin!

Thanks for this highly interesting article. I’m going to share this with a hoster we currently work with, where somehow results with Nginx and caching never quite were what we expected: very fast, but try explaining to your client that they have to wait 10 or 60 minutes until some cache expires to see most changes…

BTW, I’m also looking forward to see the second part of your managed WordPress hosting review – I’m especially intrigued to see how Kinsta will hold up. I also hope that you will test GoDaddy; they’re currently ridiculously cheap, and I think offer the exact same thing as MediaTemple: exact same servers, 100% same caching plugin/setup (Batcache and Varnish), minus the Pro stuff like staging and GIT at the moment.

With this setup I was caching for only 30 seconds. It’s designed just to handle massive traffic spikes, but the cache lifetime is short enough that content should be updated without any issue.

Second round is beginning tomorrow, Kinsta is on board last I checked. GoDaddy was tested last time. I’ve got them onboard again along with Media Temple (let’s see if there is a difference!).

I’d be really astonished if there were really noticeable differences between GoDaddy and Media Temple – their caching plugin is one and the same (gd-system-plugin.php inside /wp-content/mu-plugin), and server addresses are identical.

Of course, the backend _is_ different, with (mt)s being very elegantly designed, and already feature-enabled where GD is only promising stuff for the future.

I’m actually waiting for any of them have servers in Europe, as even with CloudFlare Railgun enabled (tested with Media Temple) latency is killing speed.

Try this stack:

Ubuntu 14.04

Nginx 1.72

HHVM 3.1.0

MariaDB 10

Nginx has default settings for max concurrents. Set it higher.

Also set max open file limits.

Put Nginx cache in /var/run.

Use a fragment cache for WordPress (by Rarst)

Try without microcache and with, you’ll be amazed.

You can compile nginx with purge module. Using Nginx-Helper plugin you can purge cache on demand.

Do you actually run this and have some benchmarks? I don’t have much time this week to try it, but perhaps I can find some time next week.

Curious if you had to rank the performance improvements here, which ones do you find/think are giving the most boost?

Yes, I run this on my own site as a test (click on my name). I have to mention I’ve added ngx_pagespeed, which adds another layer of optimisation. View the source to see what ngx_pagespeed does to the code. Cache is set to 30 days.

The stack I’ve benchmarked is what I wrote earlier. Performance is about 10 to 20% better versus your scores. Everything is served from the cache, you can ignore the stack behind it as it makes no difference. My guess is the gain is because of finetuning Nginx and Ubuntu to accept more concurrents. Moving to a 2GB server (needed to compile HHVM yourself) will perform even better but during the tests I did I’ve never seen server load go up much. I suspect there is much more to gain from further tuning Ubuntu and Nginx. We need to get the load up to 50% purely by serving concurrents to see what it can actually handle (at 50% I would spin up another droplet in load balancing scenarios).

I see you use “fastcgi_cache_use_stale updating”, that is an important setting. Without it your performance would be much lower in real world scenarios.

For real world scenarios a 1 second cache is enough if the stack behind it is fast enough to serve pages quickly. If a single post/page gets hammered (reddit frontpage or whatever) your server will only generate the page once per second. This is where HHVM and fragment caching comes in. HHVM has a just in time compiler. WordPress will perform much much much better with it. Fragment caching will speed WordPress up a lot too. Add object-cache.php and you’re almost done.

You can fine tune the maximum number of seconds you would need to microcache by counting how many (dynamic) urls your website has, take in account how many concurrents you can handle without a cache and base your valid time on that. “fastcgi_cache_use_stale updating” takes care of speedy page serving while updating the cache in the background. This method will ensure your website almost performs as a true dynamic site without the risk of ever going down from load or visitors seeing old cache.
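One way to read the sizing rule in this comment: with "fastcgi_cache_use_stale updating", each distinct URL is regenerated at most once per cache lifetime, so the worst-case backend load is roughly (number of dynamic URLs) / (cache lifetime). A rough sketch of that calculation follows. The function name and the example numbers are hypothetical, and the model ignores uncacheable requests such as logged-in users.

```shell
#!/usr/bin/env bash
# min_cache_ttl URLS BACKEND_RPS
# Smallest whole number of seconds for the microcache lifetime such that
# regenerating every URL once per lifetime stays within what the uncached
# backend can serve: ttl >= urls / backend_rps (rounded up).
# Simplified model for illustration only.
min_cache_ttl() {
    local urls="$1" backend_rps="$2"
    echo $(( (urls + backend_rps - 1) / backend_rps ))
}

# Hypothetical site: 300 dynamic URLs, backend serves ~10 uncached requests/second.
min_cache_ttl 300 10   # prints 30
```

A small site with a fast backend comes out at 1 second, which matches the commenter's point that a 1 second cache can be enough.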

Just my 2ct’s 😉

I appreciate it! Real world scenarios get a lot more interesting once you’re not serving directly from cache. That’s the next area I’d like to explore. It seems like you’ve done quite a bit in this area, if you’re interested in contributing, I’ve got a public GitHub repo (https://github.com/kevinohashi/WordPressVPS) if you’ve got a setup script for the stack you described, I would love to include it.

Anyways, when I get some time hopefully next week, I am definitely going to be trying some of this stuff. Thanks for all the details on your setup.

This was from an early test: http://imgur.com/aKgnEyQ

Need to retest after further tuning Ubuntu & Nginx.

Kevin and Daan,

Just adding my 2 cents. You can also gain some additional benefits on the DO VPS with some tuning of your /etc/defaults, /etc/sysctl.conf and /etc/security/limits.conf files. I managed to get similar results as yours Kevin without doing any FastCGI caching and using a slightly tweaked LEMP stack.

In short, what you should be looking at is increasing the number of open files your DO virtual machine can handle. I also added some swap space too as MySQL is always a little memory hungry and the performance of DO’s SSD storage is a significant order of magnitude better performing than the old spinning drives.

I’ll probably do a write up soon of the entire configuration when I get a chance. I’ve been super impressed with the uptime and the performance of Digital Ocean.

Look to increase this

Awesome to know. If you want to contribute some of those configs for people to use/see, you’re welcome to submit them to the GitHub project http://github.com/kevinohashi/WordPressVPS

Hi, I’m a Tech noob and I just bought a DigitalOcean droplet. I would like to run that stack. I’ve had a problem installing HHVM 3.0, it says something about not running in a built in web server. I think I can install nginx and mariaDB but I ended up messing up my droplet and having to start again.

would appreciate instructions like

sudo XXXXXXXXX YYYYYY

on the following instructions

Nginx has default settings for max concurrents. Set it higher.

Also set max open file limits.

Put Nginx cache in /var/run.

Increase open files limit:

https://rtcamp.com/tutorials/linux/increase-open-files-limit/

More great tutorials:

https://rtcamp.com/tutorials/

Advanced tuning:

https://calomel.org/
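For readers who asked for concrete commands for the open-file limit, here is a minimal sketch. The user name `www-data` and the value 65535 are assumptions chosen for illustration, not values from this thread; pick ones that fit your server.

```shell
#!/usr/bin/env bash
# Print the current per-process open-file limits for this shell.
show_nofile_limits() {
    echo "soft: $(ulimit -Sn)  hard: $(ulimit -Hn)"
}

# Emit /etc/security/limits.conf entries raising the limit for one user.
# Format per line: <domain> <type> <item> <value>
nofile_limits_conf() {
    local user="$1" limit="$2"
    printf '%s soft nofile %s\n%s hard nofile %s\n' "$user" "$limit" "$user" "$limit"
}

show_nofile_limits
nofile_limits_conf www-data 65535

# On a real server (as root) the two generated lines would be appended to
# /etc/security/limits.conf. nginx can also raise its own limit from
# nginx.conf with the worker_rlimit_nofile directive, alongside a larger
# worker_connections value inside the events {} block.
```

limits.conf is applied to login sessions, so a service started at boot may not pick it up; setting worker_rlimit_nofile in nginx.conf is the more direct route for the nginx workers themselves.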

This is an amazing test. I didn’t know about microcaching in nginx, but I’ve been using pagespeed and it’s very fast. Can you share how to create a test with graphs like you did? I just want to check my configuration and test its performance, but I don’t know how to do that.

Thank you very much.

All of the graphs were created by 🙂

Hi,

Great post !

I highly recommend using a cache system.

Server-side as Varnish or Redis but you have to configure the purge policy. With Varnish you can manage ESI (Edge Side Include) for partial caching.

Front-side (a caching plugin) as WP Rocket (disclaimer I’m the cofounder), and mounting the cache folder directly on the ram.

curious what type of site you tested this on, 40 million hits a day is quite a lot of traffic, must be in the top 200 of alexa at that rate.

The idea of 40M hits/day comes from the load it was able to sustain and the stats from Blitz based on load testing. I don’t actually have a site getting that much traffic (wouldn’t that be nice!).

Kevin,

Instead of ugly one-liners 🙂 , use Ansible to create extremely easy to read, yet graceful and idempotent playbooks.

Ansible is great because unlike Chef/Puppet, it doesn’t require anything special on the managed servers(other than ssh) and the master computer doesn’t need much beyond python and a few support libs.

You’ll be up and running with basic code in a couple of hours and pretty much following best practices and in a couple of days.

If you want to convert the one liners to ansible, I would be happy to have a folder on the project with Ansible setups, please contribute them! http://github.com/kevinohashi/WordPressVPS

You can make Apache just as fast with Mod Cache. It’s the kernel’s sendfile syscall that is working it’s magic. When Apache and NGiNX are serving those files from the page cache, instead of copying everything twice from userspace to kernelspace and back (seek, read, write syscalls) , it’s straight from the page cache* to the network socket for subsequent requests.

* = http://www.linuxatemyram.com

I’ll never forget a co-worker of mine, back in the late 90’s, freaking out that his first Linux install was hogging all the ram. LOL!

Excuse the misspellings above. I’m testing Simon’s voice dictation plugin.

I am using Magento. There is no doubt fastcgi_cache boosts throughput from hundreds of requests to thousands. The main problem I have is with things that must not be cached. For example, I am trying to add a product to the comparison list and I can’t; I see cached messages on other pages. Using fastcgi_cache clearly needs some no-cache rules. One thing is clear: Varnish does not perform like fastcgi_cache, and this kind of configuration could replace Varnish as the full page cache in Magento. Can you help with the no-cache rules?

I haven’t really used Magento much, so it’s hard for me to offer any specific rules to help with your issue. It might be worth asking on StackOverflow and give example URLs at least to help people understand what data is being passed and how.

Kevin thanks for this great article, it is nice to see people like you who understands the subject and are able to share it with the rest of us.

I did a similar setup using Amazon’s new T2.micro instance which is free for the first year (if you are a new customer) using LEMP configuration with some added configuration, call it my special recipe, and I made the Amazon AMIs available to everyone. I even included a step by step instruction on how to create an Amazon AWS account, setting up billing alerts to keep it under control if you get crazy popular and so.

It’s at I would appreciate any comments you might have.

WordPress is a great tool for enabling everyday users to express their ideas and businesses on the Internet, making it available at a minimum cost with maximum performance is my goal. I hope that I managed to contribute to this with Purdox.com

Best regards,

Tony

Heya! Awesome guide. I’m no stranger to Linux, Nginx, or WordPress, so I found it pretty fascinating to add the microcache part. That said, I’m getting really confused with what I should put for try_files in my nginx conf to change the permalinks from the default /?p=123 to something meaningful like /post-name … Right now, if I just change the permalink option in WordPress , I will get an Nginx 404. The only way that actually works is on the default.. I tried installing the nginx helper plugin, which supposedly isn’t needed for WordPress 3.7 or higher.

Normally to fix this I would just put something like try_files $uri $uri/ /index.php?$args; but I am not sure what to do at this point. Where would you put it, for example, in the nginx config in your github example?

Honestly off the top of my head, I’m not sure. I’m doing everything in /etc/nginx/sites-available/default and I’d probably put it in that location / {} area. but I haven’t tried or tested this. if it works, please let me know!

I have the same question…

After getting a basic wordpress installation running, I’m realizing I’m unable to use any of the pretty permalinks. What would I add to the nginx configuration to work with this? Thanks in advance to anyone if they have figured this out.

Haven’t tested this, but try adding:

try_files $uri $uri/ /index.php;

to your location block in the config.

Thank you for the response, I actually just figured it out.

I had to comment out the existing “location / {}” block. I added a new location block before the “location ~ \.php${}” block with the following:

try_files $uri $uri/ /index.php?$args;

cheers!

When i login to my admin panel for the time of caching all customers that got to the website sees my admin login top menu.. Any way to eliminate from caching admin pages?

Try adding ‘cache exceptions’ from this article to your config:

https://www.digitalocean.com/community/tutorials/how-to-setup-fastcgi-caching-with-nginx-on-your-vps

I added the cache exceptions, which allowed me to login to my site without having to wait for the cache to expire. However, the admin bar is still being served. Any advice?

Did you restart+purge caches? I am going to need to find some time to sit down and update the code and test it out to really add much more to this discussion.

Hey Kevin, this guide is awesome. But I really having some trouble using it in real life. The website is really fast and I could replicate your results, but if you actually use it with wordpress you get a lot of errors. Like that the visitors see the adminbar, that the wordpress admin panel is not updated (You accept comments and they still show as not accepted) and several others. I have tried to add the exceptions but it still seems that it is caching sessions. This is an awesome guide with amazing value, but its really a problem that its not working properly in real life, as it makes wordpress pretty unusable. Do you have any plans on updating this soon? I wish I could help but I am really just starting out with VPS

Jascha,

You’re not wrong. It was a bug in the initial setup. I’ve since fixed it on GitHub if you want to give it a try again and let me know if you have any issues.
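For context, cache exceptions of the kind discussed in this thread usually look something like the snippet generated below. This is a sketch of a common WordPress pattern, not necessarily the fix that went into the GitHub repo; the `$skip_cache` variable name and the output path are illustrative choices.

```shell
#!/usr/bin/env bash
# write_cache_exceptions FILE
# Write an nginx snippet that flags requests which should skip the microcache.
# Meant to be included inside the server {} block.
write_cache_exceptions() {
    local out="$1"
    cat > "$out" <<'EOF'
# Default: cache the response.
set $skip_cache 0;

# Never cache form submissions or URLs with a query string.
if ($request_method = POST) { set $skip_cache 1; }
if ($query_string != "")    { set $skip_cache 1; }

# Never cache the admin area or the login page.
if ($request_uri ~* "/wp-admin/|/wp-login\.php|/xmlrpc\.php") { set $skip_cache 1; }

# Never cache for logged-in users or recent commenters.
if ($http_cookie ~* "comment_author|wordpress_[a-f0-9]+|wp-postpass|wordpress_logged_in") {
    set $skip_cache 1;
}
EOF
}

# Usage (hypothetical path):
#   write_cache_exceptions /etc/nginx/cache-exceptions.conf
# then include that file in server {} and add to the PHP location block:
#   fastcgi_cache_bypass $skip_cache;
#   fastcgi_no_cache $skip_cache;
```

`fastcgi_cache_bypass` stops a flagged request from being answered out of the cache, and `fastcgi_no_cache` stops its response from being stored. Both are needed, otherwise a page rendered for a logged-in user (admin bar included) can still be saved and served to everyone else. The query-string rule also means default `?p=123` permalinks are never cached.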

When I try this out and restart my nginx server (DigitalOcean installation), it fails. Running nginx -t gives: "nginx: [emerg] invalid keys zone size "keys_zone=microcache:10m$" in /etc/nginx/nginx.conf:16"

I suppose it is supposed to be 10ms!?

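No, the 10m in keys_zone is a size, not a time: 10 megabytes of shared memory for the cache keys. The stray $ after 10m in your error message suggests an extra character ended up on that line.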

Hi, excellent article. I have completed all the steps and the cache works great.

I have a problem: when “php-fpm pool:www” runs (no cache), CPU usage is 20% to 25%, which is too much for php-fpm.

Any suggestions for lowering the CPU usage?

I can only handle 4 concurrent users without the cache.

honestly i’m not sure, is this when it’s idle or serving requests?

When serving a request.

When accessing the website (no cache), a “php-fpm pool:www” process is created. The CPU usage for that request is 20% (for 1 request, i.e. one page load).

For example:

I access testing.hostingroup.com

On the server:

php-fpm pool:www 20% CPU usage

I access testing.hostingroup.com/article/

On the server:

php-fpm pool:www 22% CPU usage.

This happens when a request is not served from the cache.

1. Disable gzip or set the compression to 1 -> it can smash the cpu @ really high load. On a tiny vps it hurts.

2. Use the gzip_static module for nginx. If you pre-gzip your css/js/html or what ever, nginx will check for the .gz version of the file in the same folder and send out instantly.

3. Make a small tmpfs ‘ram disk’ in linux/bsd via fstab. Point the fastcgi_cache to store the data in that tmpfs mount. Now all the cached data is served from ram.

If you’re into security the folks over at hardenedbsd . org are working on a hardened version of FreeBSD. I’m running a “Hemp” stack now and it’s impressive even with debugging on.

Those having issues with php-fpm search around for a tweaked php-fpm.conf. The default config is horrible. I’m not on a dev rig atm and don’t have access to any of mine.

Hey Kevin,

We run Nginx PageSpeed and PSOL (PageSpeed Optimization Libraries) on our server and it’s running extremely stable and very fast. Our services run under 600ms according to pingdom.com . You might have apache and nginx running side by side, which can cause performance issues and cache problems as well.

I had so much hope for this. I’ve been working off this as a starting point for creating a great WordPress server, however, now that it’s live I’ve learned this is not a good approach at all. By the author’s own admission, “if you wanted to roll your own WordPress VPS and serve a lot of static content, this template should be a pretty good starting point” (emphasis added), this is only good for a static website. For anyone using WordPress as a CMS and logging in and updating the site this will be a very poor solution. I’m guessing this is mostly everyone. As pointed out by previous comments the WordPress admin bar does get cached and served to the general populace. There are also various other concerns as well. Take my advice, if you’re using WordPress as a CMS, look elsewhere.

I’ve fixed that bug with caching. What other concerns do you have?

Hi Kevin, thanks for sharing.

Is this method expandable to multiple domains on a server? I’m sure that it is with a small amount of tweaking. Have you considered a short writeup of this?

Andrew,

I haven’t and quite honestly I don’t have the time right now to figure it out. Definitely something to consider in the future though!

I’ve got it working in Ubuntu but what would i need to do to set this up on Debian? Please help thank you.

I don’t know Debian honestly. I generally use Ubuntu or CentOS/Red Hat. The biggest thing would probably be the nginx config. If you can copy it from Ubuntu to Debian I would think it would work but I have no way of knowing if that’s true without trying it.

hello, thank you for your great article.

I did all the config you described and the site’s speed is great. Unfortunately php-fpm is using a lot of resources (mostly CPU), which means the website can only handle up to 1,000 users per minute and after that it gets slow! My server has 16GB RAM, an E3 CPU and an SSD. Can you please give me a good example of a php-fpm config for a WordPress site?

thank you

Sully,

I honestly don’t have any php-fpm config to help you out with. Once you’re at that level, it probably requires someone to come in and look at your configuration specifically or talking to your web hosting companies to see if they can help tune it more.

@Kevin: you made my day, my week, my month!

I have tried hard with a cluster configuration without success. Your script alone helped a single $10 VPS run 40x faster than mine.

Thanks a lot mate

Glad I could help. Make sure you’re running the latest from GitHub as there were some caching fixes

Thanks for the reminder, Kevin.

Indeed, there are caching issues with the scripts in this page, but the ones in GitHub work like a charm

Cheers,

@Kevin: did you encounter the issue that nginx cached only the front page but not other pages?

I don’t recall having that issue, are you using the github code?

Yes, I did.

I have tried several times and experienced the same issue.

Using stress test

Nginx cache only mydomain.com/wordpress/

NOT mydomain.com/wordpress/?p=1

Try changing permalink structure to pretty urls. It might not be caching because it thinks it’s post/get data.

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

Without the line above, my site loads a blank page.

no error in nginx/error.log either.

otherwise, followed all your setup @github, all working BUT

how do I know if it is caching and is caching all WP pages ??

debian jessie nginx+php5-fpm

It caches whatever someone looks at. Easy way to check is reloading. Generally the first load will be slower (it has to actually load it once before caching). Then reload should be directly from cache. This assumes you’re logged out, if you’re logged in, I believe it bypasses caching.
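A more direct check than timing reloads is to have nginx report the cache status in a response header and read it with curl. The sketch below assumes you have added `add_header X-Cache $upstream_cache_status;` to the PHP location block. `X-Cache` is an arbitrary header name chosen here, while `$upstream_cache_status` is a built-in nginx variable.

```shell
#!/usr/bin/env bash
# Extract the cache status from HTTP response headers read on stdin.
# Assumes nginx sends: X-Cache: <status>  (header name chosen for this sketch)
parse_cache_status() {
    tr -d '\r' | awk -F': *' 'tolower($1) == "x-cache" { print $2; exit }'
}

# Make a GET request, discard the body, and report the cache status.
# GET is used rather than HEAD because the cache key in this config
# includes $request_method, so a HEAD request would be cached separately.
cache_status() {
    curl -s -o /dev/null -D - "$1" | parse_cache_status
}

# Usage: request the same page twice while logged out.
#   cache_status http://example.com/   # first request: typically MISS
#   cache_status http://example.com/   # again within 30s: typically HIT
```

Other values you may see include BYPASS, EXPIRED, STALE and UPDATING, which help tell a page that is never cached apart from one whose cache entry simply aged out.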

Hi Kevin,

Can you please help me set up my dedicated server with 5 WordPress sites on it?

Thanks in advance

Alex G.

I’m sorry, I don’t provide support services. Perhaps you can talk to your hosting company about having them implement some changes.

I installed my 3 sites and using Varnish cache helped me. Are there any more ways to optimize?

There’s always more ways to optimize, but a page cache like Varnish is probably the biggest gain for the least amount of work. Adding in some type of object caching through a plugin like W3TC, WP Super Cache or similar is another easy gain. Default is generally to disk, you could make it faster with something like Memcached or Redis.

I’m currently on blue-hosts and paying $25/monthly, for some reason i think i’m overpaying as my traffic is really low. Should i switch to Digital Ocean or are there any better alternatives ?

That is a really general question. Digital Ocean is an unmanaged VPS which is nothing like Blue Host which is known for managing shared hosting. Are you trying to save money? Get better performance? Learn how to manage a server? It’s hard to make a recommendation without understanding what you’re trying to accomplish.

Nice site. I tried adding you on FB as I had no other way of trying to get a hold of you, as I wanted to get in on these benchmark reviews :). Whoever talked about open file limits, is correct. The more you have the more you can serve. Using basic Apache 2.4 with Mod_LSAPI, as long as you have a decent WP Caching System (I use WP Super Cache) you can achieve similar results. So here is over 40 Million hits a day, on a CloudLinux Shared Hosting Server, where we run pure Raid10 SSDs, and a few other tweaks…

https://www.blitz.io/report/67460373654e64335174bfbaa0a4b3fa

If you need a 1 Core VPS (SSD) to mess around with, give me a shout, as I love this stuff!

Have a great day.

You can contact me privately from the contact page – https://reviewsignal.com/contact if you want to participate in something involving Review Signal.

Kevin, I like your article, just a few questions to add more validity to the test results.

1. How many records in the db (post table)?

2. Average number of postmeta per post?

3. How many different pages were loaded at the same time during the 60-second run?

4. How many connections per page were made?

kind regards

Andrew

It’s all just hitting a static cache (nginx). Blitz is set up to hit 1 page. You can see everything in the GitHub repo (https://github.com/kevinohashi/WordPressVPS), including test results directly from Blitz (PDFs).

Looks Pretty Awesome! Going to try this on a Digital Ocean Droplet next week.

I would strongly recommend using tmpfs for this.

tmpfs /var/cache/fastcgi tmpfs defaults,nodev,nosuid,noexec,noatime,nodiratime,uid=http,mode=1770 0 0

This should do the trick

(replace http with the user your distro uses for nginx)

So I followed the guide here: https://www.atlantic.net/community/howto/install-wordpress-nginx-lemp-ubuntu-14-04/ to set up WordPress on Ubuntu, then followed your guide to add the microcaching script, and I saw zero performance boost. Any idea what I did wrong?

I’m sorry, I don’t really know. Probably a question for serverfault/stackoverflow.

I have been using your setup for over a year and a half now.

Is there any update on it?

I am not really maintaining this. You could use something like EasyEngine or ServerPilot if you want to run it on your own server. Otherwise, there are plenty of managed options with great performance: https://reviewsignal.com/blog/2015/07/28/wordpress-hosting-performance-benchmarks-2015/

Just out of curiosity, did you try opcode caching in FPM? I’d be interested to know how this would affect your benchmarks.

This is everything I did. If you want to test that out and let me know how it goes, I would love to see.

I know this test was done in 2014, but isn’t OPcache good enough? Do I still need to install microcache?

Maybe? It depends on what “good enough” means. Opcode caching works at a different level than microcaching. A microcache is a full page cache, so a cache hit is served by nginx without touching PHP. With an opcode cache, PHP still executes on every request and only skips recompiling the script. You can always use both 🙂

We have a new batch of servers coming in soon (well, one server), which will be running Linux VMs. I’ll have to give this a try. Keen to see how it benchmarks against Apache with one of our clients. I’ll let you know if it’s anything of interest! Thanks for the share 🙂

Hello

I read your article.

Can I optimize the server with these steps?

I do not use WordPress, though. I use another CMS called osclass.

Do you have an optimization document for the server?

Or if I do all the steps except the WordPress part, will it work?

Thanks.

I don’t know anything about osclass, so it would be impossible for me to say if it will or won’t work unfortunately.

While this seems to be a great config for static websites, has anyone tried this with a CMS (more specifically WordPress)? Which one is the better option, LAMP or LEMP?

Haha, definitely surprised after testing these modifications 😀

Seriously, nginx is funny

Why not use nginx aio threads?

And to tune nginx with fastcgi_cache much more:

don’t use *all* CPU cores if there are other servers like mysql, postfix, etc. running on the same machine: worker_processes …; php-fpm also needs CPU cores. 😉

use sendfile on;

use reuseport: server { listen 80… reuseport;…}

use a tmpfs like /run/nginx to keep the fastcgi cache in RAM!

increase the cache inactive (delete) time if the files do not change often, like old static articles, e.g. …inactive=1440m

tune the fastcgi_buffers for your system and hardware (32- or 64-bit)

turn *off* the access_log but leave the error_log on, including for static files like images, CSS, JS… or

buffer the access_log, e.g. access_log… buffer=8k;

use the open_file_cache

last but not least: switch to HTTP/2 with streams. 😉
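The suggestions above could be collected into one config roughly like this. It is only a sketch, not the configuration used in the benchmarks. The zone name `microcache`, the cache path, the buffer sizes, the cache lifetimes, the server name, the document root and the PHP-FPM socket path are all placeholder assumptions to adjust for your own system. `aio threads` needs nginx 1.7.11 or later built with thread support, and `reuseport` needs nginx 1.9.1 or later, so the stock nginx on Ubuntu 14.04 is too old for both. HTTP/2 is left out because it also needs a TLS listener and certificates.

```nginx
# Sketch only: every value below is an assumption to adapt, not a tested recommendation.

# Leave CPU time for PHP-FPM, MySQL, etc. instead of claiming every core.
worker_processes 1;

events {
    worker_connections 1024;
}

http {
    include      mime.types;
    default_type application/octet-stream;

    sendfile on;
    # Requires nginx >= 1.7.11 built with thread pool support (--with-threads).
    aio threads;

    # Cache open file descriptors and metadata for frequently served files.
    open_file_cache          max=1000 inactive=20s;
    open_file_cache_valid    30s;
    open_file_cache_min_uses 2;
    open_file_cache_errors   on;

    # FastCGI cache kept on a tmpfs mount (path assumed). Entries that are not
    # requested for 24 hours (1440m) are removed from the cache.
    fastcgi_cache_path /run/nginx/cache levels=1:2 keys_zone=microcache:10m inactive=1440m;

    server {
        # Requires nginx >= 1.9.1.
        listen 80 reuseport;
        server_name example.com;   # placeholder
        root /var/www/html;        # placeholder
        index index.php;

        # Either turn request logging off entirely...
        access_log off;
        # ...or keep it and buffer the writes instead:
        # access_log /var/log/nginx/access.log combined buffer=8k;
        error_log /var/log/nginx/error.log;

        location / {
            try_files $uri $uri/ /index.php?$args;
        }

        location ~ \.php$ {
            include fastcgi_params;
            fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
            fastcgi_pass unix:/var/run/php5-fpm.sock;   # assumed socket path

            fastcgi_cache       microcache;
            fastcgi_cache_key   $scheme$request_method$host$request_uri;
            fastcgi_cache_valid 200 1s;                 # assumed microcache TTL

            fastcgi_buffer_size 16k;
            fastcgi_buffers     16 16k;
        }
    }
}
```

Running `nginx -t` after editing checks the syntax before a reload. Note that this sketch caches every PHP response, so a real WordPress site would also need rules to bypass the cache for logged-in users and admin pages.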

I can’t find php7.0-fpm.sock in /var/run

I tried to change the 127.0.0.1:80 address in the nginx config to this address according to this solution: https://stackoverflow.com/a/22419567/1122900

but I couldn’t do it.

my site is down now by the way 😉

ServerPilot or Cloudways for a WooCommerce shop, any feedback? From which host did you migrate your shops?

hello,

I am looking for some feedback about ServerPilot or Cloudways, as I am getting tired of DreamH dedicated servers (slow, old 2010 servers with Intel(R) Xeon(R) CPU E5640 @ 2.67GHz).

I am thinking of ServerPilot or Cloudways, but can we choose servers in the EU and US?

Are you hosting WooCommerce on ServerPilot or Cloudways? Are you happy with it? Where did you migrate your shops from?

Is the migration automatic?

Thank you so much for your help.

It’s crazy how long this article has been on page 1 of Google for WordPress stacks… are you going to do a new comparison cuz some of these scripts are now dead mate.

What about SlickStack script (Bash only) for WordPress with MU plugins?

Yeah, I’m kinda surprised too. It’s way outdated, there are a lot of very clearly better options at this point. I don’t have any plans to do another one of these at the moment.