Photo Credit: _Abhi_

People are more likely to express negative sentiments or give negative reviews than they are positive ones.

I hear this in almost every discussion about Review Signal and how it works. There are certainly many studies to back this up. One major study concluded that bad is stronger than good. One company found people were 26% more likely to share bad experiences. There is plenty of research in the area of Negativity Bias for curious readers.

Doesn't that create problems for review sites?

The general response I have to this question is no. It doesn't matter if there is a negativity bias when comparing between companies because it's a relative comparison. No company, at least not at the start, has an unfair advantage in terms of what their customers will say about them.
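To make the relative-comparison argument concrete, here is a minimal sketch. It assumes, purely for illustration, that a rating is the share of positive opinions and that negativity bias acts as the same multiplier on every company's count of negative opinions. The company names, counts and the `rating`/`ranking` helpers are hypothetical. Under that assumption the absolute ratings drop, but the ordering of the companies does not change.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Company:
    name: str
    positive: int  # count of positive opinions
    negative: int  # count of negative opinions


def rating(c: Company, negativity_bias: float = 1.0) -> float:
    """Share of positive opinions, with negatives inflated by a uniform bias factor.

    Assumption for illustration: the bias multiplies every company's negatives equally.
    """
    weighted_negative = c.negative * negativity_bias
    return c.positive / (c.positive + weighted_negative)


def ranking(companies: list[Company], negativity_bias: float = 1.0) -> list[str]:
    """Company names ordered from best to worst rating."""
    ordered = sorted(companies, key=lambda c: rating(c, negativity_bias), reverse=True)
    return [c.name for c in ordered]


# Hypothetical counts, for illustration only.
hosts = [
    Company("Host A", positive=700, negative=300),
    Company("Host B", positive=500, negative=500),
    Company("Host C", positive=300, negative=700),
]

print(ranking(hosts))                        # no bias applied
print(ranking(hosts, negativity_bias=1.26))  # negatives shared 26% more often
```

Because the rating equals 1 / (1 + bias × negative / positive), any positive bias factor applied to everyone preserves the order of the negative-to-positive ratios, and therefore the ranking.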

Negativity bias may kick in later, when customers who have had bad experiences want to keep sharing them with anyone who will listen, regardless of changes at the company. Negative inertia, the stickiness of negative opinion, is a real thing. Review Signal has no mechanism to overcome it beyond counting each person's opinion only once. That controls it at the individual level, but not at the systemic level if a company has really strong negative brand associations.
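One way to picture counting each person's opinion only once is to key opinions by author and company, so that someone who complains repeatedly still contributes a single opinion. This is a minimal sketch and not Review Signal's actual implementation. The tuple layout and the rule of keeping each author's most recent opinion are assumptions made for illustration, and the function names are hypothetical.

```python
from __future__ import annotations

from collections import Counter
from datetime import date

# Each opinion: (author, company, sentiment, date). Hypothetical layout.
Opinion = tuple[str, str, str, date]


def one_opinion_per_person(opinions: list[Opinion]) -> dict[tuple[str, str], str]:
    """Keep a single sentiment per (author, company) pair.

    Assumption for illustration: when someone posts several times about the
    same company, only their most recent opinion counts.
    """
    latest: dict[tuple[str, str], tuple[date, str]] = {}
    for author, company, sentiment, when in opinions:
        key = (author, company)
        if key not in latest or when > latest[key][0]:
            latest[key] = (when, sentiment)
    return {key: sentiment for key, (_, sentiment) in latest.items()}


def sentiment_counts(opinions: list[Opinion], company: str) -> Counter:
    """Count deduplicated opinions by sentiment for one company."""
    deduped = one_opinion_per_person(opinions)
    return Counter(s for (_, c), s in deduped.items() if c == company)


# Hypothetical data: one unhappy customer posting three times, one happy customer posting once.
opinions = [
    ("angry_user", "Host A", "negative", date(2013, 8, 2)),
    ("angry_user", "Host A", "negative", date(2013, 8, 3)),
    ("angry_user", "Host A", "negative", date(2013, 9, 1)),
    ("happy_user", "Host A", "positive", date(2013, 8, 5)),
]
print(sentiment_counts(opinions, "Host A"))  # one negative, one positive
```

This limits repetition by individuals. As noted above, it does nothing about a negative reputation that many different people share.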

What if a company experiences a disaster, such as a major outage? Does that make it hard to recover in the ratings?

This was a nuanced question I hadn't heard before, and credit goes to Reddit user PlaviVal for asking it.

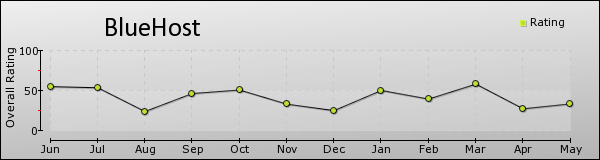

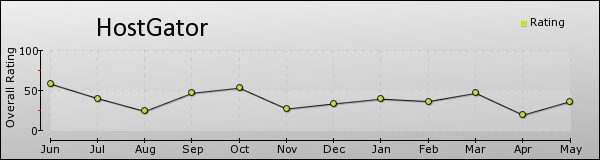

Luckily, major outages are rare events, though they are fascinating to observe from a data perspective. The most recent and largest outage was the EIG (BlueHost, HostGator, JustHost, HostMonster) outage in August 2013. A chart of the event's actual impact is available here.

When I looked at the EIG hosts post-outage, there really hasn't been a marked improvement in their ratings. Every company profile on Review Signal has a Trends tab that graphs ratings month by month, so you can see how a company has done over the past 12 months.
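A per-month trend like the one on the Trends tab can be sketched by bucketing opinions by calendar month and computing each month's share of positive opinions. The assumptions are mine and hypothetical. A rating is taken to be positive / (positive + negative), and the input is a flat list of dated sentiments. Months with no opinions are skipped. The sketch is not how Review Signal actually builds its charts.

```python
from __future__ import annotations

from collections import defaultdict
from datetime import date


def monthly_trend(opinions: list[tuple[date, str]], end: date, months: int = 12) -> dict[str, float]:
    """Share of positive opinions per calendar month, for the `months` months ending at `end`.

    Assumption for illustration: rating = positive / (positive + negative).
    """
    # Months counted from year 0, so the window can be compared with simple integers.
    end_index = end.year * 12 + end.month - 1
    start_index = end_index - months + 1
    counts = defaultdict(lambda: {"positive": 0, "negative": 0})
    for when, sentiment in opinions:
        index = when.year * 12 + when.month - 1
        if start_index <= index <= end_index and sentiment in ("positive", "negative"):
            counts[f"{when.year}-{when.month:02d}"][sentiment] += 1
    # "YYYY-MM" keys sort chronologically; every stored month has at least one opinion.
    return {
        month: c["positive"] / (c["positive"] + c["negative"])
        for month, c in sorted(counts.items())
    }


# Hypothetical opinions; the January 2012 entry falls outside the 12-month window.
opinions = [
    (date(2013, 7, 10), "positive"),
    (date(2013, 7, 20), "positive"),
    (date(2013, 8, 2), "negative"),
    (date(2013, 8, 3), "positive"),
    (date(2012, 1, 1), "negative"),
]
trend = monthly_trend(opinions, end=date(2014, 5, 31))
print(trend)
```

Monthly buckets make single-event dips, such as an outage month, easy to spot. The trade-off is that a low-volume month can swing widely.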

There is definitely some variance, but poor ratings post-outage seem quite common. It's hard to make an argument that these companies have recovered to their previous status and are simply being held back by major outcries that occurred during the outage.

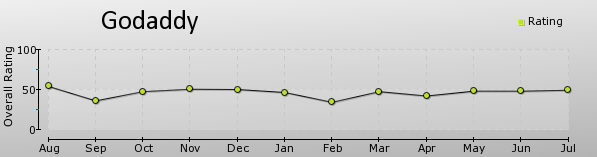

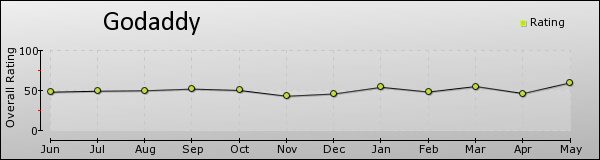

The only other company with a major outage I can track in the data is GoDaddy. GoDaddy has had numerous negative events in its timeline since we started tracking it: the elephant-killing scandal, SOPA, DNS outages and multiple Super Bowl events.

August 2012 - July 2013

June 2013 - May 2014

There are clear dips for events such as the September 2012 DNS outage and the Super Bowl in February. GoDaddy's overall rating is 46% right now and the trend is slightly up. But it has historically hovered around 45-50% and maintained that range despite the dips from bad events. There is arguably some room for it to be rated higher, depending on the time frame you think is fair, but we're talking a couple of percentage points at most.

What about outages affecting multiple companies, e.g. resellers or infrastructure providers like Amazon that other hosts run on top of? Are all the companies affected equally?

No. An outage at a big provider that serves multiple hosts doesn't mean all of those hosts will be treated identically. Customer reaction may be heavily influenced by the behavior of the host they are actually using.

Let's say there is an outage in Data Center X (DC X), which hosts Host A and Host B. The outage lasts 4 hours. Host A tells customers 'sorry, it's all DC X's fault' and Host B tells customers 'We're sorry, our DC X provider is having issues. To make up for the downtime, your entire month's bill is free because we didn't meet our 99.99% uptime guarantee.' Even though Host A and Host B had identical technical issues, I imagine customers would respond differently. I've definitely experienced great customer service that dramatically changed my opinion of a company because of how it handled a shitty situation. I think the same applies here.
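The arithmetic behind Host B's offer is worth spelling out. Assuming a 30-day month, a 99.99% uptime guarantee allows only about 4.3 minutes of downtime, so a 4-hour outage breaks it many times over. The sketch uses a 30-day month and a simple rule that the SLA is breached when downtime exceeds the allowance. Both are assumptions for illustration; real hosting SLAs define their own measurement windows and credits.

```python
def allowed_downtime_minutes(uptime_guarantee: float, days_in_month: int = 30) -> float:
    """Minutes of downtime a monthly uptime guarantee permits (fraction, e.g. 0.9999)."""
    minutes_in_month = days_in_month * 24 * 60
    return (1 - uptime_guarantee) * minutes_in_month


def sla_breached(outage_minutes: float, uptime_guarantee: float, days_in_month: int = 30) -> bool:
    """True if an outage exceeds what the guarantee allows (simplified breach rule)."""
    return outage_minutes > allowed_downtime_minutes(uptime_guarantee, days_in_month)


allowance = allowed_downtime_minutes(0.9999)
print(f"99.99% allows {allowance:.2f} minutes per 30-day month")
print("4-hour outage breaches SLA:", sla_breached(4 * 60, 0.9999))
```

The technical failure is the same for both hosts. What differs is whether the host acknowledges the breach and compensates customers for it.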

Customer opinions are shaped by both internal and external factors. The ranking system here at Review Signal isn't perfect and has room for improvement. That said, our rankings don't currently show any major signs of weakness in the algorithms, despite the potential for issues like the ones discussed here.

Going forward, the biggest challenge will be creating a decay function. How much is a review today worth versus a review from the past? Past a certain age, a review just isn't as valuable as a recent one. Eventually, this is a problem I'm going to have to address and figure out. For now, it's on the radar, but it doesn't seem like a major issue yet.
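One common way to build a decay function is exponential decay with a half-life, where a review's weight halves after every fixed number of days. This is a sketch of one possible approach, not something Review Signal uses. The 365-day half-life and the helper names are hypothetical placeholders.

```python
from __future__ import annotations

from datetime import date


def review_weight(review_date: date, today: date, half_life_days: float = 365.0) -> float:
    """Exponential decay: weight is 1.0 today and halves every `half_life_days` days."""
    age_days = (today - review_date).days
    return 0.5 ** (age_days / half_life_days)


def decayed_rating(reviews: list[tuple[date, bool]], today: date, half_life_days: float = 365.0) -> float:
    """Weighted share of positive reviews, where older reviews count for less."""
    total = 0.0
    positive = 0.0
    for review_date, is_positive in reviews:
        weight = review_weight(review_date, today, half_life_days)
        total += weight
        if is_positive:
            positive += weight
    if total == 0:
        raise ValueError("no reviews to rate")
    return positive / total


# Hypothetical reviews: an old negative one and a recent positive one.
reviews = [(date(2013, 8, 1), False), (date(2014, 5, 1), True)]
print(decayed_rating(reviews, today=date(2014, 6, 1)))  # above 0.5: the recent review weighs more
```

The hard part is choosing the half-life. A short half-life lets ratings recover quickly after an event like an outage. A long one keeps old grievances, and the negative inertia described earlier, in the rating for longer.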

Kevin Ohashi

