Sending email should be simple. It's something I really don't like thinking about.

I ran into an issue where I wanted to send email from the command line in a bash script that helps power Review Signal: a notification when something goes wrong.

The only problem: I don't have a mail program installed. I also don't really want to send email from my own servers, because making sure the messages actually get delivered isn't something I want to spend a lot of time thinking about.

The first solution that jumped to mind was SendGrid, whose API I had the pleasure of trying out at PayPal BattleHack DC. It was dead simple. I had integrated it into PHP, though, and I didn't know if it worked from the command line.

I checked the docs and found they had a REST API:

curl -d 'to=destination@example.com&toname=Destination&subject=Example Subject&text=testingtextbody&from=info@domain.com&api_user=your_sendgrid_username&api_key=your_sendgrid_password' https://api.sendgrid.com/api/mail.send.json

If you want to clean it up with variables:

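#!/bin/sh
# Message details and SendGrid credentials
SGTO=receiver@example.com
SGTONAME='Some Name'
SGSUBJECT='Email Subject'
SGFROM=from@example.com
SGTEXT='Email Text'
SGUSER=user
SGPASS=password

# --data-urlencode keeps spaces and characters like & in a value from breaking the request
curl --data-urlencode "to=${SGTO}" \
     --data-urlencode "toname=${SGTONAME}" \
     --data-urlencode "subject=${SGSUBJECT}" \
     --data-urlencode "text=${SGTEXT}" \
     --data-urlencode "from=${SGFROM}" \
     --data-urlencode "api_user=${SGUSER}" \
     --data-urlencode "api_key=${SGPASS}" \
     https://api.sendgrid.com/api/mail.send.json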
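Since the whole point was a notification when something goes wrong, here is a rough sketch of how the call might be wrapped so a script only sends mail on failure. The function names `send_alert` and `run_or_alert` are made up for illustration, the credentials are placeholders, and it assumes the same `mail.send.json` endpoint and parameters shown above.

```shell
#!/bin/sh
# Placeholder settings (assumptions): override them in the environment.
SGTO=${SGTO:-receiver@example.com}
SGFROM=${SGFROM:-from@example.com}
SGUSER=${SGUSER:-user}
SGPASS=${SGPASS:-password}

# send_alert SUBJECT BODY  (hypothetical helper name)
# Posts one message to the mail.send.json endpoint.
# --fail makes curl exit non-zero when the server answers with an HTTP error.
send_alert() {
    curl --silent --show-error --fail \
        --data-urlencode "to=${SGTO}" \
        --data-urlencode "from=${SGFROM}" \
        --data-urlencode "subject=$1" \
        --data-urlencode "text=$2" \
        --data-urlencode "api_user=${SGUSER}" \
        --data-urlencode "api_key=${SGPASS}" \
        https://api.sendgrid.com/api/mail.send.json
}

# run_or_alert COMMAND [ARGS...]  (hypothetical helper name)
# Runs the command; if it exits non-zero, emails the exit status,
# then returns the command's original exit status to the caller.
run_or_alert() {
    "$@"
    status=$?
    if [ "$status" -ne 0 ]; then
        send_alert "Job failed: $1" "Command '$*' exited with status $status"
    fi
    return "$status"
}
```

With this sourced into a script, something like `run_or_alert ./nightly_job.sh` (a hypothetical script name) would stay quiet on success and send one email on failure.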

Voila! Sending emails from my bash script is now simple.

WordPress & WooCommerce Hosting Performance Benchmarks 2021

WordPress & WooCommerce Hosting Performance Benchmarks 2021 WooCommerce Hosting Performance Benchmarks 2020

WooCommerce Hosting Performance Benchmarks 2020 WordPress Hosting Performance Benchmarks (2020)

WordPress Hosting Performance Benchmarks (2020) The Case for Regulatory Capture at ICANN

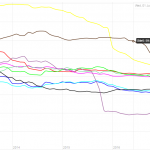

The Case for Regulatory Capture at ICANN WordPress Hosting – Does Price Give Better Performance?

WordPress Hosting – Does Price Give Better Performance? Hostinger Review – 0 Stars for Lack of Ethics

Hostinger Review – 0 Stars for Lack of Ethics The Sinking of Site5 – Tracking EIG Brands Post Acquisition

The Sinking of Site5 – Tracking EIG Brands Post Acquisition Dirty, Slimy, Shady Secrets of the Web Hosting Review (Under)World – Episode 1

Dirty, Slimy, Shady Secrets of the Web Hosting Review (Under)World – Episode 1 Free Web Hosting Offers for Startups

Free Web Hosting Offers for Startups