This is one of the ways I improve performance here at Review Signal. I run an Nginx reverse proxy and cache in front of the Apache server. Apache can be slow and doesn't have a built-in caching system for a lot of the static content we serve, so I put Nginx in front to cache and serve all the content it can directly from its own cache. This takes load off my servers, and users get their content faster. It also helps under high load. If you want to see how it performs, I've included a screenshot from Blitz.io at the bottom showing how well Review Signal performs with 500 concurrent users.

The Nginx config (http, server):

    http {
        proxy_redirect off;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

        # caching options
        proxy_cache_path /var/cache/nginx levels=1:2 keys_zone=my-cache:8m max_size=1000m inactive=600m;
        proxy_temp_path /var/cache/tmp;

        server {
            listen 80;
            server_name subdomain.example.com;
            # access_log and error_log take a file path ("on" would be used as a file name);
            # these paths are examples
            access_log /var/log/nginx/access.log;
            error_log /var/log/nginx/error.log;
            location / {
                # trailing slash so that /page is forwarded as /subdomain/page
                proxy_pass http://localhost:3000/subdomain/;
            }
        }

        server {
            listen 80;
            server_name example.com;
            access_log /var/log/nginx/access.log;
            error_log /var/log/nginx/error.log;
            location / {
                proxy_pass http://localhost:3000/;
                proxy_cache my-cache;
                proxy_cache_valid 200 302 60m;
                proxy_cache_valid 404 1m;
            }
        }
    }

Please note that only the parts relevant to this article are included (http and server). You will definitely need to add more options to the config, both before the http block and inside it. I didn't include those parts because they can vary a huge amount. See the Nginx documentation for more details and example configurations.
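To give a rough idea of where the http block sits, here is a bare-bones sketch of a full nginx.conf. The values are placeholders chosen for illustration, not the settings used on Review Signal, so treat them as assumptions and adjust them for your system.

```nginx
# Hypothetical minimal skeleton; every value here is an illustrative assumption.
worker_processes auto;          # start one worker process per CPU core

events {
    worker_connections 1024;    # max simultaneous connections per worker
}

http {
    include mime.types;         # map file extensions to Content-Type headers

    # ... the proxy, cache and server directives from this article go here ...
}
```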

Configuration Walkthrough:

    proxy_redirect off;
    proxy_set_header Host $host;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_redirect off tells Nginx not to rewrite the Location and Refresh headers in responses coming back from the proxied server. The forwarding itself is done later with proxy_pass. The headers we set pass the original request details on to the server you are proxying to. Without X-Real-IP/X-Forwarded-For, that server has no way to learn the real client address and will simply see your reverse proxy server's IP address.

    proxy_cache_path /var/cache/nginx levels=1:2 keys_zone=my-cache:8m max_size=1000m inactive=600m;
    proxy_temp_path /var/cache/tmp;

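The first line tells Nginx where to save cache data (path), how many directory levels to use for the cache files (levels), the name and size of the shared memory zone that holds the cache keys (keys_zone), the maximum size of the cache on disk (max_size), and how long an entry can go without being requested before it is removed (inactive). The second line tells Nginx where to write temporary files while it receives responses from the proxied server, before they are moved into the cache.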

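    server {
        listen 80;
        server_name subdomain.example.com;
        # access_log and error_log take a file path ("on" would be used as a file name);
        # these paths are examples
        access_log /var/log/nginx/access.log;
        error_log /var/log/nginx/error.log;
        location / {
            # trailing slash so that /page is forwarded as /subdomain/page
            proxy_pass http://localhost:3000/subdomain/;
        }
    }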

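This server block creates a reverse proxy to localhost:3000. It doesn't do any caching; it simply forwards requests between Nginx and localhost:3000. It listens for subdomain.example.com, and every request to it (location /) is passed to the /subdomain path on localhost:3000. Because proxy_pass includes a path, Nginx replaces the matched location prefix (/) with that path, so the path should end in a slash (/subdomain/). Otherwise a request for /page would be forwarded as /subdomainpage.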

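    server {
        listen 80;
        server_name example.com;
        # access_log and error_log take a file path ("on" would be used as a file name);
        # these paths are examples
        access_log /var/log/nginx/access.log;
        error_log /var/log/nginx/error.log;
        location / {
            proxy_pass http://localhost:3000/;
            proxy_cache my-cache;
            proxy_cache_valid 200 302 60m;
            proxy_cache_valid 404 1m;
        }
    }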

This server is a reverse proxy and a cache. It responds to any request for example.com and forwards it to localhost:3000. proxy_cache turns on caching using the zone called my-cache (notice this matches the keys_zone setting in proxy_cache_path). proxy_cache_valid defines which HTTP status codes are cached and for how long. In this example, 200 (OK) and 302 (Found) are cached for 60 minutes, and 404 (Not Found) is cached for 1 minute.
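One way to check that the cache is actually being used is to expose Nginx's $upstream_cache_status variable in a response header. The sketch below is not part of the config above; it repeats the example.com server with one extra line, and the header name X-Cache-Status is just a common convention I'm assuming here.

```nginx
server {
    listen 80;
    server_name example.com;

    location / {
        proxy_pass http://localhost:3000/;
        proxy_cache my-cache;
        proxy_cache_valid 200 302 60m;
        proxy_cache_valid 404 1m;

        # Report how the cache handled the request (MISS, HIT, EXPIRED, BYPASS, ...).
        # The header name is an arbitrary choice.
        add_header X-Cache-Status $upstream_cache_status;
    }
}
```

Requesting the same page twice (for example with curl -I) should typically show MISS the first time and HIT the second, provided the backend's response is cacheable.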

Conclusion

Setting up a reverse proxy isn't too difficult, but getting it to work with your application can occasionally be complicated. You can empty the cache manually by deleting everything in the cache folder, which often helps fix issues. Nginx is fairly smart: by default it only caches responses to GET and HEAD requests, so POST requests are always passed through to the backend. This setup works great when you serve a lot of static content. I run it in front of almost everything here at Review Signal, including our blog. It's easy enough to configure different caching levels for different parts of your application. And if you're ever in doubt, test the application directly, then reverse proxy without caching, and only then turn on caching.
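As a sketch of what different caching levels for different parts of an application could look like, each location can carry its own cache settings. The /static/ and /admin/ prefixes and the cache times below are hypothetical, and the example assumes the my-cache zone is already defined with proxy_cache_path in the http block.

```nginx
server {
    listen 80;
    server_name example.com;

    # Static assets: cache for a long time (hypothetical /static/ prefix).
    location /static/ {
        proxy_pass http://localhost:3000;
        proxy_cache my-cache;
        proxy_cache_valid 200 24h;
    }

    # Admin area: proxy only, never cache (hypothetical /admin/ prefix).
    location /admin/ {
        proxy_pass http://localhost:3000;
        proxy_cache off;
    }

    # Everything else: short-lived cache.
    location / {
        proxy_pass http://localhost:3000;
        proxy_cache my-cache;
        proxy_cache_valid 200 302 10m;
        proxy_cache_valid 404 1m;
    }
}
```

Setting proxy_cache off in a location is also a convenient way to fall back to plain proxying while you track down a problem.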

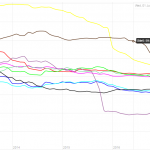

As promised, here is what Review Signal's performance looks like when rushing with Blitz.io from 1-500 concurrent users with this nginx setup. It responds to around 300 concurrent requests without going over 100ms response time.
